Series 1 – Collecting files

For the last few months I’ve been working on an ETL process in PowerShell to replace a DOS batch process that collects log data from a third-party report server application. While analyzing how the DOS process works, I discovered that it was losing data. The log contains different types of records, so the number of columns varies between 12 and 15, and records were being lost because the process used the SQL Server BCP utility to bulk copy the data into a SQL Server database.

Here is where PowerShell brings its magic to the table. This is our chance to be creative.

Collecting Log Data

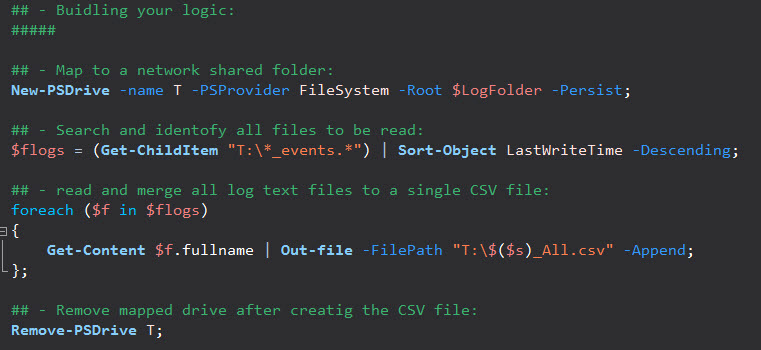

In its basic form, we need to connect to the report server and access its log folder. We do this by mapping a drive to that folder, which in most cases will be a shared folder.

[sourcecode language="powershell"]
# $LogFolder holds the path to the report server's log folder (typically a UNC share).
New-PSDrive -Name T -PSProvider FileSystem -Root $LogFolder -Persist;
[/sourcecode]

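You can use the “New-PSDrive” cmdlet to map a drive letter to your log folder. You need to set its -PSProvider parameter to “FileSystem”. Adding -Persist creates a Windows mapped network drive, so the drive is also available outside your PowerShell session; this requires a drive-letter name and a remote (UNC) path as the root. Without -Persist, the drive exists only for the current session.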
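As a minimal sketch of the mapping step, the snippet below wraps “New-PSDrive” in a small helper that reuses the drive if it is already mapped. The function name Connect-LogDrive and the share path in the commented usage are hypothetical placeholders, not names from the original process.

[sourcecode language="powershell"]
# Hypothetical helper: maps a drive to a log folder unless it is already mapped.
function Connect-LogDrive {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [string] $Root,
        [switch] $Persist   # only valid for a drive letter mapped to a UNC path
    )

    # Reuse the drive if an earlier run left it mapped.
    $existing = Get-PSDrive -Name $Name -PSProvider FileSystem -ErrorAction SilentlyContinue
    if ($existing) { return $existing }

    # -Scope Global keeps the drive visible after the function returns.
    New-PSDrive -Name $Name -PSProvider FileSystem -Root $Root -Persist:$Persist -Scope Global -ErrorAction Stop
}

# Assumed share path; replace it with your report server's log share.
# $LogFolder = '\\ReportServer01\Logs'
# Connect-LogDrive -Name T -Root $LogFolder -Persist
[/sourcecode]

Stopping on error (-ErrorAction Stop) is a design choice here: if the share is unreachable, it is usually better to fail early than to run the rest of the collection against a drive that does not exist.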

Keep in mind that if you are collecting from more than one server, you will need to remove the drive and map it again for each server. This is as simple as using the following cmdlet:

[sourcecode language="powershell"]
# Remove the T: drive so it can be mapped to the next server.
Remove-PSDrive T;
[/sourcecode]

We created drive T: earlier, and now we have removed it from the session.
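For more than one server, the map and remove steps can be wrapped in a loop. The sketch below is one possible way to do it; the helper name Invoke-OnLogDrive and the server names and shares in the commented usage are hypothetical. The try/finally block removes the drive even if the work for one server fails, so the next server can reuse the same drive name.

[sourcecode language="powershell"]
# Hypothetical helper: maps a drive to each log folder in turn, runs the work, then unmaps.
function Invoke-OnLogDrive {
    param(
        [Parameter(Mandatory)] [hashtable]   $LogFolders,  # server name -> log folder path
        [Parameter(Mandatory)] [scriptblock] $Work,        # receives the server name
        [string] $DriveName = 'T'
    )

    foreach ($server in $LogFolders.Keys) {
        # Session-only mapping (no -Persist); it lives only while this function runs.
        New-PSDrive -Name $DriveName -PSProvider FileSystem -Root $LogFolders[$server] -ErrorAction Stop | Out-Null
        try {
            & $Work $server
        }
        finally {
            # Always release the drive so the next server can be mapped to it.
            Remove-PSDrive -Name $DriveName
        }
    }
}

# Assumed server names and shares, for illustration only:
# $servers = @{ 'RPT01' = '\\RPT01\Logs'; 'RPT02' = '\\RPT02\Logs' }
# Invoke-OnLogDrive -LogFolders $servers -Work { param($s) Get-ChildItem "T:\*_events.*" }
[/sourcecode]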

Looking for Log files

Once the folder is mapped to the T: drive, we need to find and collect the type of logs we want to pull. In my scenario, I’m looking for all logs labeled “*.Events.*.log.*”. One caveat I discovered earlier is that these text log files don’t contain the server name. But, no problem! This is another opportunity to be creative with PowerShell.

Here we use the “Get-ChildItem” cmdlet together with “Sort-Object” to sort the results by the “LastWriteTime” property. You will find this property very useful later as you progress through the data collection process. The result set needs to be stored in a PowerShell object:

[sourcecode language="powershell"]
# Collect the event logs from the mapped drive, newest first.
$flogs = Get-ChildItem "T:\*_events.*" | Sort-Object LastWriteTime -Descending;
[/sourcecode]
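Because the list carries “LastWriteTime”, that property could also drive an incremental pull. The following is only a sketch under assumptions: the function name Get-RecentLog and the idea of a cutoff date are illustrative and not part of the original process.

[sourcecode language="powershell"]
# Hypothetical helper: returns matching log files written after a cutoff, newest first.
function Get-RecentLog {
    param(
        [Parameter(Mandatory)] [string]   $Path,    # for example "T:\*_events.*"
        [Parameter(Mandatory)] [datetime] $Since
    )

    Get-ChildItem -Path $Path -File |
        Where-Object { $_.LastWriteTime -gt $Since } |
        Sort-Object -Property LastWriteTime -Descending
}

# Example: only logs touched in the last 7 days (the 7-day window is an assumption).
# $flogs = Get-RecentLog -Path "T:\*_events.*" -Since (Get-Date).AddDays(-7)
[/sourcecode]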

Building your CSV files

The next step is to collect all logs from the server by merging all the files into one CSV text file. For this step we use the “foreach” statement with a script block, together with the “Get-Content” cmdlet, whose output is appended to the final CSV file using the “Out-File” cmdlet with the “-Append” parameter.

[sourcecode language="powershell"]
# Append the content of every log file to one CSV file named after the server ($s).
foreach ($f in $flogs)
{
    Get-Content $f.FullName | Out-File -FilePath "T:\$($s)_All.csv" -Append;
};
[/sourcecode]

Notice that I have a PowerShell variable, $s, defined to hold the server name. *hint*
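To make the role of the server name variable concrete, here is a hedged sketch of the merge step as a reusable function. The name Merge-ServerLog, its parameters, and the choice to delete an existing output file before merging are my assumptions for illustration, not part of the original script.

[sourcecode language="powershell"]
# Hypothetical helper: merges log files into "<server>_All.csv" in an output folder.
function Merge-ServerLog {
    param(
        [Parameter(Mandatory)] [System.IO.FileInfo[]] $Files,
        [Parameter(Mandatory)] [string] $ServerName,
        [Parameter(Mandatory)] [string] $OutputFolder
    )

    $target = Join-Path -Path $OutputFolder -ChildPath "$($ServerName)_All.csv"

    # Assumption: start from an empty file so a rerun does not append to old output.
    if (Test-Path -LiteralPath $target) { Remove-Item -LiteralPath $target }

    foreach ($f in $Files) {
        Get-Content -LiteralPath $f.FullName | Out-File -FilePath $target -Append
    }

    $target   # return the path of the merged file
}

# $s = 'RPT01'   # assumed server name
# Merge-ServerLog -Files $flogs -ServerName $s -OutputFolder 'T:\'
[/sourcecode]

Since the log records themselves carry no server name, keeping it in the file name is what lets a later step tell which server each merged file came from.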

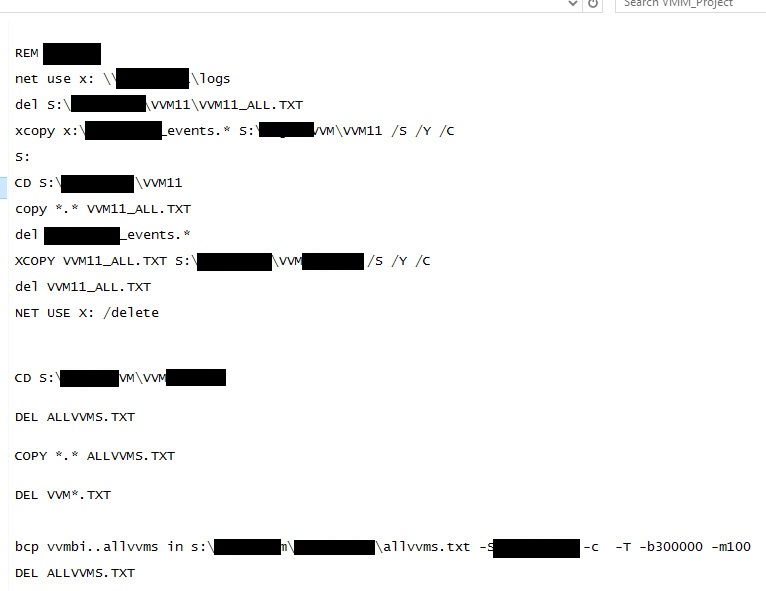

Now, here’s a comparison between the sample DOS batch file used previously and the basic PowerShell script.

DOS batch sample

PowerShell basic code sample

Keep in mind that you’re building a puzzle. There’s a lot more logic to be added to this code.

Be creative!

What’s Next?

In the next blog post I’ll cover reading the CSV file and importing it into a PowerShell object before it reaches its final destination on a SQL Server.